Virtual Tübingen

The Virtual Tübingen project grew out of the desire to create a natural, realistic and controllable virtual environment for investigating human spatial perception. The goal was to build a highly realistic model of the city of Tübingen through which one can move in real time. The project builds on the expertise in virtual reality and navigation that was developed in our department between 1995 and 2000.

The "Virtual Tübingen" Project

The Virtual Tübingen project was motivated by the emerging need for a naturalistic, controllable environment for investigating human spatial cognition. The goal was to build a highly realistic virtual model of Tübingen through which one can move in real time. This project was built on experience with Virtual Reality and Navigation gathered in this lab over the past couple of years.

We chose Tübingen as the city to model for several reasons. First, the center of Tübingen has a rather complex structure: there are considerable height differences, the streets are often curved and vary in width, and the houses differ considerably from one another and have varied facades. This complexity provides a much more interesting environment for navigation experiments than highly regular cities such as New York City. Second, we wanted to achieve a high degree of visual realism, which in our case is done by combining photographs and high-quality texture mapping with three-dimensional geometry. Photographing houses and streets and gaining access to architectural data was, of course, easiest for our own town. Finally, choosing Tübingen made it particularly easy to find people who are well practiced in navigating the real city, which allows a good comparison between real-world and virtual experiments.

Virtual Tübingen and the Speed of Light

VT and the speed of light in collaboration with the Institute for Astronomy and Astrophysics Tuebingen (only in German)

Natural Environments and Experimental Control

Because we are interested in how humans navigate in daily life, we would ideally use the real world as the place of action. However, the drawback of running experiments in natural environments such as cities is that the experimenter has almost no control over the environment or over the way the observers (the subjects in our experiments) interact with it.

So far, the common solution to this problem has been to use very impoverished but well controlled laboratory scenes (like a block world or simple mazes). For a long time now the approach in psychophysical experiments has been a reductionist one: Remove more and more detail from the scene until you are able to describe in full detail what is left, yielding full control of the experimental setting. Recently, however, it has become more and more apparent that it is often extremely difficult if not impossible to generalize results from these types of experiments to our perception and behaviour in the real world.

View of the Kirchgasse towards the market place

Positive Impact of VR on Investigating Human Performance and Perception

The enormous increase in the performance of graphics hardware and display technology over the years has given us the power to create virtual environments capable of producing sensory experiences of unprecedented realism. It is true that 'sensory' usually means 'visual' or 'auditory', but that combination in itself seems powerful enough to start with. Furthermore, if properly set up, the interaction of the observer with the virtual environment can provide haptic, tactile and proprioceptive experiences as well. In short, these techniques let us combine extremely detailed, complex, interactive, multi-sensory scenes with a very high level of control: an almost optimal setting for our experiments. We would like to put forward the view that this new approach, though extremely demanding in terms of equipment, skills, time, manpower, and creativity, might ultimately provide a highly valuable and more direct way of probing and understanding human cognition and behaviour. Providing support for this view has become part of our scientific mission.

Building Virtual Tübingen

The creation of Virtual Tübingen consisted of two processes: processing of geometry (the three-dimensional 'structure' of the architecture) and processing of textures (the 'images', that is, photographs of the buildings and structures providing information about their visual appearance). For a house this means that creating the walls is separated from creating the textures that will be put onto these walls.

This division was motivated by the fact that combining lower-resolution geometric data (which we had for most of the houses from architectural models of Tübingen) with high-resolution textures (which we obtained simply by taking photographs) yields a very realistic model of Tübingen's houses and streets. Modeling landscapes, for example, would have required a different approach (e.g., using high-resolution aerial photographs).

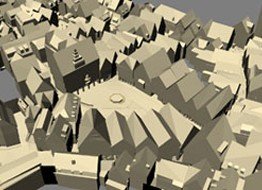

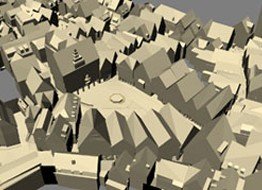

The model includes approx. 200 houses and covers an area of approx. 500x150 m.

Model of Virtual Tübingen without Texture

Geometry Processing

Our basic input data consist of architectural drawings provided by the city administration. These data were gathered some years ago when a 1:400 scale wooden model of the center of Tübingen was built. For almost every house we have a floor plan for each floor (horizontal views) as well as drawings of the front and back of the house (vertical views). An example can be seen below. These drawings were the only data we had, which meant that roofs, dormers and oriels were often only partially specified, forcing us to examine the real house and re-model those structures. An important point in this context is that the houses in Tübingen are quite irregular; for example, it is very common for the upper floors to stick out a little relative to the lower floors.

The geometry was constructed in two steps. First, we collected the appropriate drawings and used a drawing tablet to mark the relevant points (corners) on each of them. Selecting these points was a manual process that included some minor simplifications of the geometry. The vertical views were used to determine the height of each floor and of the roof. Our basic assumption was that all walls are vertical and flat. In the large majority of cases this is quite reasonable and simplifies the construction process considerably; individual adjustments were made afterwards to make the geometry more realistic.

Second, after the drawings had been digitized, a custom-made software tool generated a polygonal 3D model of the house from the specified fiducial points. A problem that became apparent at this stage was that the drawings carried no alignment markers, so the digitized drawings had to be aligned manually (by shifting and rotating) using some assumptions about the actual spatial relationships between the different layers. The model was then converted to a format that could be loaded into our 3D modeling program. We used this modeler to improve the geometry a little, filling gaps and handling things the first tool could not take care of, such as building the roof.
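The core idea of the second step, turning digitized floor-plan corners into flat vertical walls, can be illustrated with a minimal Python sketch. This is not the custom tool described above: the function names, the data layout and the example dimensions are all hypothetical, and roofs, gaps and alignment are ignored.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]
Triangle = Tuple[Point3D, Point3D, Point3D]


def extrude_floor(outline: List[Point2D], z_bottom: float, z_top: float) -> List[Triangle]:
    """Turn one closed floor-plan outline into vertical wall triangles."""
    triangles: List[Triangle] = []
    n = len(outline)
    for i in range(n):
        (x0, y0), (x1, y1) = outline[i], outline[(i + 1) % n]
        # Four corners of one flat, vertical wall segment.
        a = (x0, y0, z_bottom)
        b = (x1, y1, z_bottom)
        c = (x1, y1, z_top)
        d = (x0, y0, z_top)
        # Split the wall quad into two triangles.
        triangles.append((a, b, c))
        triangles.append((a, c, d))
    return triangles


def extrude_house(floors: List[Tuple[List[Point2D], float]], ground: float = 0.0) -> List[Triangle]:
    """Stack floors; each entry is (outline, floor height in metres)."""
    triangles: List[Triangle] = []
    z = ground
    for outline, height in floors:
        triangles.extend(extrude_floor(outline, z, z + height))
        z += height
    return triangles


# Hypothetical two-storey house whose upper floor sticks out over the lower one.
ground_floor = [(0.0, 0.0), (8.0, 0.0), (8.0, 6.0), (0.0, 6.0)]
upper_floor = [(-0.3, 0.0), (8.3, 0.0), (8.3, 6.0), (-0.3, 6.0)]
walls = extrude_house([(ground_floor, 3.0), (upper_floor, 2.8)])
print(len(walls))  # 4 walls x 2 triangles x 2 floors = 16
```

Because each floor has its own outline, overhanging upper floors fall out of the data naturally; only the horizontal surfaces under the overhang and the roof would still need to be added by hand or by a further step.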

At this stage we have a triangulated wireframe model of the building (see example below).

VRML model of a building shown as a wireframe

Texture Processing

Texture processing was the most time-consuming part of the whole process. The problems started with taking the pictures. In the center of Tübingen there are many places where the houses are typically 20 meters high while the streets are only 5 meters wide. This causes problems with shadowing and obstacles, and it also means that even with a wide-angle lens one cannot photograph a house frontally, which results in considerable perspective distortion. We therefore had to write software for perspective correction that uses the output of the geometry process together with the photograph of the house.
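One common way to perform this kind of correction is a planar homography that maps the four corners of a facade in the photograph onto an upright rectangle whose proportions come from the geometry. The Python sketch below illustrates that general technique only; it is not the in-house tool, and the pixel coordinates, the wall size and the 100 pixels-per-metre scale are made-up values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    """Return the 9 entries (row-major, last entry 1) mapping 4 src points to 4 dst points."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]


def apply_homography(h: Sequence[float], p: Point) -> Point:
    """Map one photo pixel to its position in the rectified texture."""
    x, y = p
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


# Hypothetical facade corners in the photo (top-left, top-right, bottom-right,
# bottom-left): the top edge appears narrower because the camera looks upward.
photo_corners = [(310.0, 120.0), (690.0, 140.0), (860.0, 1480.0), (140.0, 1450.0)]
# Target rectangle from the geometry: an 8.0 m x 11.5 m wall at 100 px per metre.
texture_corners = [(0.0, 0.0), (800.0, 0.0), (800.0, 1150.0), (0.0, 1150.0)]

h = homography(photo_corners, texture_corners)
rectified = [apply_homography(h, p) for p in photo_corners]
```

A full tool would additionally resample every texture pixel through the inverse mapping; the sketch only computes the transform and checks it on the corner points.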

The major problem that was left after correcting the perspective of the texture was the variability in lighting and contrast that we experienced between pictures of different floors in one house and between different houses. This is due to shadowing and the variability of the outdoor lighting conditions and could only be solved satisfactorily by manual adjustments of the textures to achieve an overall homogeneous look.

Texture with perspective distortion

Corrected texture

A textured house looks like this

Facilities

PanoLab and TrackingLab: the setups in which the Virtual Tübingen model is mainly used

The half-spherical screen of the PanoLab allows the simulation of large visual fields, providing an increased degree of immersion. In the large room of the TrackingLab, experiments are conducted in which participants walk through and explore extensive virtual worlds displayed via head-mounted displays.

Software

The geometry of Virtual Tübingen was initially created using Multigen Creator. Textures were processed using Photomodeler and an in-house tool called CorTex, which automatically corrects distorted images. Later on, the model was converted into the max format; currently, any modifications or extensions to the model are made with 3ds Max by Autodesk.

To render and display Virtual Tübingen in real time, a graphics engine based on OpenGL Performer by SGI was developed in-house. This engine then became part of the veLib and was modified to use pure OpenGL together with the SDL. As the 3D format for exporting all the data from 3ds Max into the veLib, we currently use VRML, since it is easy to use and is supported by many modeling programs.

veLib.kyb.mpg.de

www.libsdl.org

www.multigen.com

www.photomodeler.com

www.autodesk.com
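To give an impression of why VRML is easy to work with as an exchange format, the following Python sketch writes a small triangle mesh as a VRML file. It assumes VRML97 (VRML 2.0) syntax with an `IndexedFaceSet` node; the source does not state which VRML version or which node types the actual export uses, and the function name `to_vrml` is hypothetical.

```python
from typing import List, Tuple


def to_vrml(points: List[Tuple[float, float, float]],
            triangles: List[Tuple[int, int, int]]) -> str:
    """Serialize a triangle mesh as a single VRML97 Shape node."""
    coords = ",\n        ".join(f"{x:g} {y:g} {z:g}" for x, y, z in points)
    # Each face is a list of vertex indices terminated by -1.
    faces = ",\n        ".join(f"{a}, {b}, {c}, -1" for a, b, c in triangles)
    return (
        "#VRML V2.0 utf8\n"
        "Shape {\n"
        "  geometry IndexedFaceSet {\n"
        "    coord Coordinate {\n"
        "      point [\n"
        f"        {coords}\n"
        "      ]\n"
        "    }\n"
        "    coordIndex [\n"
        f"        {faces}\n"
        "    ]\n"
        "  }\n"
        "}\n"
    )


# One vertical wall, 8 m wide and 3 m high, split into two triangles.
wall_points = [(0.0, 0.0, 0.0), (8.0, 0.0, 0.0), (8.0, 3.0, 0.0), (0.0, 3.0, 0.0)]
wall_faces = [(0, 1, 2), (0, 2, 3)]
vrml_text = to_vrml(wall_points, wall_faces)
print(vrml_text)
```

The format is plain text, so a mesh can be inspected or patched in an editor, which is part of what makes it convenient for moving data between tools.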

PanoLab

The PanoLab is a wide-area, highly realistic projection system for interactive presentations of virtual environments. The Cognitive and Computational Psychophysics Department has employed a large-screen, half-cylindrical virtual reality projection system to study human perception since 1997. Studies in a variety of areas have been carried out, including spatial cognition and the perceptual control of action. Virtual reality technology is well suited to these studies. One must, of course, take care that the simulation is as realistic as possible, including in terms of the field of view (FOV) covered. With this setup, we are able to provide visual information to almost all of the human FOV. This has the additional benefit that it allows us to systematically study the influence of specific portions of the FOV on various aspects of human perception and performance.

Towards a Natural Field of View

In 2005, we made a number of fundamental improvements to the virtual reality system. Perhaps the most noticeable change is an alteration of the screen size and geometry. This includes extending the screen horizontally (from 180 to 230 degrees) and adding a floor screen and projector. It is important to note that the projection screen curves smoothly from the wall projection to the floor projection, resulting in an overall screen geometry that can be described as a composition of a cylinder and a sphere. Vertically, the screen subtends 125 degrees (25 degrees of visual angle upwards and 100 degrees downwards from the normal observation position).

Furthermore, we enhanced the image generation and projection aspects of the system. By switching from CRT projectors to DILA projectors, we were able to obtain flicker-free, high-resolution images. The system currently uses four JVC SX21 DILA projectors, each with a resolution of 1400x1050 pixels and a refresh rate of 60 Hz. To compensate for the visual distortions caused by the curved projection screen and to achieve soft-edge blending in the overlap areas, we invented and developed the openWARP® technology. Finally, all virtual scenes for this system are now generated by a graphics cluster consisting of four standard PCs with high-end graphics cards.
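The principle behind soft-edge blending can be shown with a small Python sketch: across the overlap, each projector's image is faded so that the light from both projectors adds up to a constant. This is a generic textbook ramp, not a description of openWARP; in particular, modelling the projector response as a pure power law with gamma 2.2 is an assumption made only for illustration.

```python
def blend_weight(t: float, gamma: float = 2.2) -> float:
    """Pixel-value weight at position t across the overlap.

    t = 0 is the outer edge of this projector's image, t = 1 the inner end
    of the overlap. The weight is pre-distorted so that the emitted light
    (assumed proportional to pixel value ** gamma) ramps linearly with t.
    """
    t = min(1.0, max(0.0, t))
    return t ** (1.0 / gamma)


def emitted_light(pixel_weight: float, gamma: float = 2.2) -> float:
    """Assumed projector response: light output as a power of the pixel value."""
    return pixel_weight ** gamma


# In the overlap, one projector sees position t and its neighbour sees 1 - t.
totals = []
for step in range(5):
    t = step / 4
    total = emitted_light(blend_weight(t)) + emitted_light(blend_weight(1.0 - t))
    totals.append(total)
    print(f"t={t:.2f}  combined light={total:.3f}")
```

Without the gamma pre-distortion, a linear ramp in pixel values would produce a visibly darker band in the middle of the overlap, which is why the weight is raised to the power 1/gamma.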

TrackingLab

The TrackingLab is a large 12x15 m hall with a state-of-the-art optical tracking system that is used for head tracking and motion capture. This setup is mainly used to investigate aspects of human spatial perception, experience, and representation, for example how we manage to keep track of where we came from, or how spatial environments are stored and accessed in our memory. Furthermore, the setup allows interdependencies between spatial behavior and affective responses to be systematically evaluated and therefore lends itself well to basic architectural research.

Head Tracking

To create a Virtual Environment (VE) that participants can freely explore as if they were walking in a real environment, we need to present the VE on a Head-Mounted Display (HMD) that the participant wears in front of his or her eyes. To create the illusion of an environment that is fixed in world space, we need to know the precise location and orientation of the participant's head. This is done by multiple Vicon MX13 systems that send out near-infrared light and pick the rays up again as they are reflected by the markers. This results in multiple 2D images from which, in conjunction with the accurate calibration of the system, the positions of the markers in 3D space can be calculated. A large number of cameras, distributed around the entire recording volume, ensures excellent precision of the 3D reconstruction and avoids errors due to occlusion.
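How a 3D marker position follows from several 2D observations can be sketched as a least-squares intersection of viewing rays. The Python example below is a generic illustration, not the algorithm used by the Vicon software: it assumes that calibration has already turned each 2D detection into a ray (camera position plus direction), and the camera layout and marker position are invented.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(rays: List[Tuple[Vec3, Vec3]]) -> Vec3:
    """Point minimizing the summed squared distance to all rays.

    Each ray is (camera origin, direction towards the marker). With
    P_i = I - d_i d_i^T, the solution satisfies (sum P_i) p = sum P_i o_i.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        d = normalize(direction)
        for i in range(3):
            for j in range(3):
                proj = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += proj
                b[i] += proj * origin[j]
    det = det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are parallel; at least two distinct views are needed")
    # Solve the 3x3 system with Cramer's rule.
    solution = []
    for k in range(3):
        mk = [[b[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        solution.append(det3(mk) / det)
    return (solution[0], solution[1], solution[2])


# Hypothetical setup: three cameras at 3 m height around a 12 x 15 m hall.
marker = (1.0, 2.0, 1.5)
cameras = [(0.0, 0.0, 3.0), (12.0, 0.0, 3.0), (6.0, 15.0, 3.0)]
rays = [(cam, tuple(m - c for m, c in zip(marker, cam))) for cam in cameras]
estimate = triangulate(rays)
```

With noisy detections the rays no longer meet in a single point, and adding more cameras both averages out the error and keeps the system solvable when some views are occluded.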

Knowing the precise transformation of the participant's head, one virtual camera position for each eye can be calculated and images from a 3D scene model can be rendered and displayed on the HMD to produce a stereoscopic view from the participant's point of view within the VE. In order to provide a smooth and jitter-free experience, this is done 120 times per second.
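The step from a tracked head pose to two virtual cameras can be sketched as follows. This is a minimal Python illustration under stated assumptions: a coordinate frame with x forward, y to the left and z up, a fixed interpupillary distance of 64 mm, and a head orientation given as a 3x3 rotation matrix. None of these conventions are taken from the actual system.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def mat_vec(r: Sequence[Sequence[float]], v: Sequence[float]) -> Vec3:
    """Multiply a 3x3 rotation matrix (given as rows) by a vector."""
    return (sum(r[0][j] * v[j] for j in range(3)),
            sum(r[1][j] * v[j] for j in range(3)),
            sum(r[2][j] * v[j] for j in range(3)))


def eye_positions(head_pos: Vec3, head_rot: Mat3, ipd: float = 0.064) -> Tuple[Vec3, Vec3]:
    """Return (left eye, right eye) world positions for a tracked head pose."""
    half = ipd / 2.0
    # In the head frame the eyes sit half an IPD to the left and right.
    left_offset = mat_vec(head_rot, (0.0, half, 0.0))
    right_offset = mat_vec(head_rot, (0.0, -half, 0.0))
    left = (head_pos[0] + left_offset[0], head_pos[1] + left_offset[1], head_pos[2] + left_offset[2])
    right = (head_pos[0] + right_offset[0], head_pos[1] + right_offset[1], head_pos[2] + right_offset[2])
    return left, right


def yaw_matrix(degrees: float) -> Mat3:
    """Rotation about the vertical (z) axis, used here only to build an example pose."""
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


# At 120 updates per second, each frame must be tracked and rendered within:
FRAME_BUDGET_MS = 1000.0 / 120.0  # about 8.33 ms

# Example: head at 1.7 m height, turned 90 degrees to the left (facing +y).
left_eye, right_eye = eye_positions((2.0, 3.0, 1.7), yaw_matrix(90.0))
```

Each eye position, combined with the shared head orientation, defines one virtual camera; rendering the scene once per camera gives the stereoscopic image pair for the HMD.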

Motion Capture

The same tracking principle can be used to record individual markers attached to different body parts of the participant. With a set of 60 markers it is possible to record the full-body motion of a person. It is also possible to attach small markers to a person's face or hand and capture very detailed motion data. Such data can be used as training data for action recognition systems, for computer animations of 3D avatars, or even for studying the interaction of two people in VR.

Related Scientific Publications

Conference papers:

Neth C, Souman JL, Engel D, Kloos U, Bülthoff HH and Mohler BJ:

"Velocity-Dependent Dynamic Curvature Gain for Redirected Walking". IEEE Virtual Reality (VR) 2011, 1-8. (2011)

Meilinger T, Knauff M and Bülthoff HH:

"Working memory in wayfinding - a dual task experiment in a virtual city". Proceedings of the 28th Annual Conference of the Cognitive Science Society, 585-590 (2006)

Meilinger T, Franz G and Bülthoff HH:

"From Isovists via Mental Representations to Behaviour: First Steps Toward Closing the Causal Chain". In: Spatial Cognition '06, Space Syntax and Spatial Cognition Workshop, Universität Bremen, Bremen, Germany, 1-16.

Riecke BE:

"Simple User-Generated Motion Cueing can Enhance Self-Motion Perception (Vection) in Virtual Reality". VRST '06: Proceedings of the ACM Symposium on Virtual Reality Software and Technology, 104-107, ACM Press, New York, NY, USA (11 2006)

Mohler B, Riecke B, Thompson W and Bülthoff HH:

"Measuring Vection in a Large Screen Virtual Environment". Proceedings of the 2nd Symposium on Applied Perception in Graphics and Visualization (APGV), 103 - 109, ACM Press, New York, NY, USA (10 2005)

Riecke BE, Schulte-Pelkum J, Avraamides M, von der Heyde M and Bülthoff HH:

"Scene Consistency and Spatial Presence Increase the Sensation of Self-Motion in Virtual Reality". Proceedings of the 2nd Symposium on Applied Perception in Graphics and Visualization (APGV), 111 - 118, ACM Press, New York, NY, USA (08 2005)

Abstracts:

Meilinger T, Widiger A, Knauff M and Bülthoff HH:

"Verbal, visual and spatial memory in wayfinding". Poster presented at the 9th Tübinger Wahrnehmungskonferenz 9, 127 (2006)

Journal papers:

Frankenstein J, Mohler BJ, Bülthoff HH and Meilinger T:

"Is the Map in Our Head Oriented North?". Psychological Science (accepted), (2011)

Neth C, Souman JL, Engel D, Kloos U, Bülthoff HH and Mohler BJ:

"Velocity-Dependent Dynamic Curvature Gain for Redirected Walking". IEEE Transactions on Visualization and Computer Graphics (TVCG), in press (2011)

Meilinger T, Franz G and Bülthoff HH:

"From isovists via mental representations to behaviour: first steps toward closing the causal chain". Environment and Planning B: Planning and Design, advance online publication (2009).

Trutoiu LC, Mohler BJ, Schulte-Pelkum J and Bülthoff HH:

"Circular, linear, and curvilinear vection in a large-screen virtual environment with floor projection". Computers and Graphics 33(1), 47-58 (2009).

Meilinger T, Knauff M and Bülthoff HH:

"Working memory in wayfinding - a dual task experiment in a virtual city". Cognitive Science, 32, 755-770 (2008).

Riecke BE, Cunningham DW and Bülthoff HH:

"Spatial updating in virtual reality: the sufficiency of visual information". Psychological Research 71(3), 298-313 (09 2006)

Riecke BE, Schulte-Pelkum J, Avraamides MN, von der Heyde M and Bülthoff HH:

"Cognitive Factors can Influence Self-Motion Perception (Vection) in Virtual Reality". ACM Transactions on Applied Perception 3(3), 194-216 (07 2006)

Riecke BE, von der Heyde M and Bülthoff HH:

"How real is virtual reality really? Comparing spatial updating using pointing tasks in real and virtual environments". Journal of Vision 1(3), 321a (May 2001).

Distler HK, Gegenfurtner KR, van Veen HAHC, Hawken MJ:

"Velocity constancy in a virtual reality environment". Perception, 29, 1423 - 1435 (2000)

van Veen HJ, Distler HK, Braun S and Bülthoff HH:

"Navigating through a virtual city: Using virtual reality technology to study human action and perception". Future Generation Computer Systems 14, 231-242 (1998)

Sellen K, van Veen HAHC and Bülthoff HH:

"Accuracy of pointing to invisible landmarks in a familiar environment". Perception 26 (suppl.), 100 B (1997)

People who are or have been involved in the realization of the Virtual Tübingen project

Conception: Heinrich H. Bülthoff, Markus von der Heyde, Hendrik-Jan van Veen, Hartwig Distler, Hanspeter A. Mallot

Modelling: Hendrik-Jan van Veen, Barbara Knappmeyer, Benjamin Turski, Stephan Waldert, Claudia Holt, Stephan Braun, Regine Frank

Software Development: Markus von der Heyde, Stephan Braun, Hartwig Distler, Boris Searles

System Administrators VR: Michael Weyel, Hans-Günther Nusseck, Stephan Braun, Michael Renner, Walter Heinz

Photographer: Rolf Reutter, Markus von der Heyde, Benjamin Turski

Psychophysical Experiments with the VT Environment: Christian Neth, Tobias Meilinger, Bernhard E. Riecke, Gerald Franz, Markus von der Heyde, Hendrik-Jan van Veen, Kirsten Sellen, Sybille Steck