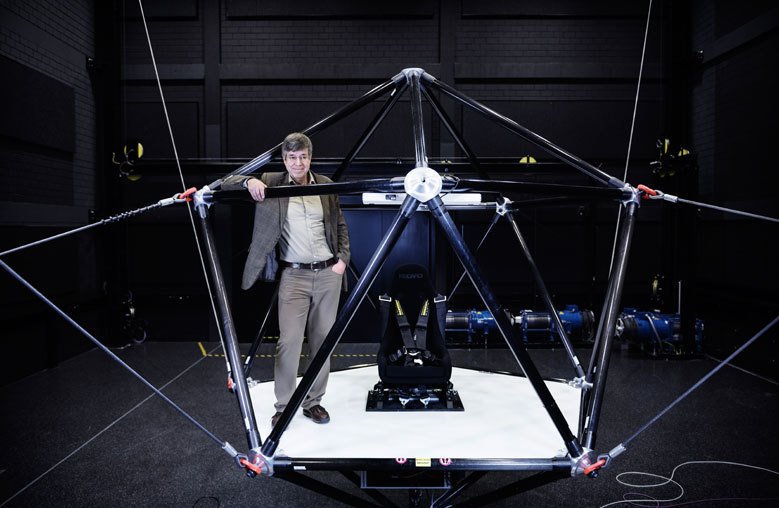

“The cable robot opens up a whole new dimension”

Max Planck director Heinrich Bülthoff on perception research with motion simulators

In the Cyberneum on the Max Planck Campus in Tübingen, people are transported into virtual worlds so that researchers can investigate how our brain processes impressions. A motion simulator, a cable robot, has recently been developed that makes entirely new experiments possible, says Heinrich Bülthoff, head of the Research Department. We interviewed him about the underlying research interest, the deliberate and entirely real deception of the human senses, and cooperation with the aviation and automotive industries.

Professor Bülthoff, there are three motion simulators in the Cyberneum. What is the nature of the general interest in this research?

We want to better understand how people’s senses work together as they interact with their environment. A classic example is standing upright. Young children first need to learn that skill. Until they do, they simply fall over. It’s like trying to balance a pencil on its tip. Our brain must constantly respond to signals from the eyes, the organs of equilibrium and joint receptors in order to control our muscles.

What role does motion simulation play in this?

We’re particularly interested in perception thresholds. In the case of the eye, this is very well understood, for example with regard to the minimum brightness that we’re able to perceive. By contrast, our understanding of the vestibular system, our balance organ in the inner ear, is still relatively poor. In the experiment, the subject sits in the simulator in a completely darkened space. A certain movement is initiated, and we try to determine the exact moment the subject perceives it.

So in the motion experiments, senses are purposely masked to see how it affects perception.

That’s right. Normally the senses work together very well. But if experiments are carried out in the dark or if the subject flies through a cloud in a virtual aircraft simulated by the cable robot, the sense of vision can no longer be used for orientation. Then we humans have to rely solely on our vestibular system. The vestibular system is able to measure acceleration very well, but it is not suitable for determining location. After some time, perception falls out of sync with physical reality.

What does that mean?

The vestibular system deceives us. When an inexperienced pilot flies through a cloud without instrument guidance, it tells him that he has to carry out a compensating movement to return to the horizontal position. But this movement worsens the situation. Every aircraft is therefore equipped with an instrument known as the artificial horizon. Without this visual reference, the aircraft would fall out of the cloud within around three minutes, usually resulting in a crash.

Is this knowledge a result of research with motion simulators?

No, that was already known, but we’re now trying to measure the performance of the human brain more accurately in its attempts to integrate various senses. We can build virtual reality (VR) worlds very accurately and stimulate the visual sensory channel in subjects by means of VR goggles and the vestibular system by means of the motion platform. In this way, we can investigate the interplay of senses, for example while the subject is performing control tasks. So, as the volunteers are driving or flying, we can see how well these two senses have to interact to accomplish the task.

Could you describe the tasks?

One example is flying a helicopter. Like standing upright, it takes place in a state of unstable equilibrium. If you want to hover a helicopter over a spot, you have to constantly control the joystick with your right hand, compensate for rotation around the vertical axis with the foot pedals and control the engine power with your left hand. Otherwise the helicopter will immediately swing off in a random direction. This is quite a difficult task requiring all our senses and motor coordination. And we’re now able to recreate this task very well with the new cable robot.

How so?

The cable robot has a very large range of motion that is limited only by the size of the hall. The workspace of our simulator currently measures eight by five by five metres. Conventional motion platforms, of the kind commonly used for flight and driving simulation, have a maximum excursion of half a metre to one metre in each direction.

What else distinguishes the cable robot?

We can perform very gentle movements as well as accelerations of a magnitude that was previously impossible. We’ve tested the maximum acceleration with a 75-kilogramme dummy. We can achieve up to 1.5 g, which is more than any normal car can manage. Whether the motions are slow or fast, they all distort the subjects’ perception. We start with an initial acceleration, but then we tilt the platform together with the seat back so that the subject’s head is inclined. Since the subject is pressed into the seat at the same time, the vestibular system in their head tells them that they are continuously accelerating. In this way we can simulate prolonged accelerations, even though a real movement would have reached the end of the hall some time ago. Of course, the subject must not perceive the tilt; otherwise the whole experiment will break down. This is where our research into perception thresholds comes into play. Depending on the setting, a tilt of about 0.5 to 5 degrees per second is possible without the subject noticing it. The subject interprets the motion as linear acceleration.
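The tilt trick described in this answer can be made concrete with a little arithmetic. The following is only a rough sketch, not the Cyberneum's actual motion algorithm: it assumes that a seat pitched by an angle θ exposes the subject to a gravity component of g·sin θ along the fore-aft axis, and that the platform tilts at a constant rate. The function names, the value of `G` and the example target of 0.1 g are illustrative assumptions; the tilt rates and the eight-metre workspace length come from the interview.

```python
import math

G = 9.81  # standard gravity in m/s^2 (rounded; an assumption of this sketch)


def tilt_for_acceleration(accel_ms2: float) -> float:
    """Pitch angle in degrees whose gravity component along the
    fore-aft axis (G * sin(angle)) equals the requested acceleration."""
    if abs(accel_ms2) > G:
        raise ValueError("tilt alone cannot imitate more than 1 g")
    return math.degrees(math.asin(accel_ms2 / G))


def time_to_reach(tilt_deg: float, rate_deg_per_s: float) -> float:
    """Seconds needed to reach tilt_deg at a constant tilt rate."""
    return abs(tilt_deg) / rate_deg_per_s


def seconds_until_wall(length_m: float, accel_ms2: float) -> float:
    """Seconds a platform starting at rest can really accelerate before it
    has covered length_m (from d = a * t^2 / 2; braking distance ignored)."""
    return math.sqrt(2.0 * length_m / accel_ms2)


# Hypothetical target: a sustained 0.1 g, as in gentle car acceleration.
target = 0.1 * G
angle = tilt_for_acceleration(target)
print(f"tilt needed: {angle:.2f} degrees")
for rate in (0.5, 5.0):  # tilt rates quoted in the interview
    print(f"at {rate} deg/s: {time_to_reach(angle, rate):.1f} s to reach it")
print(f"real motion over 8 m lasts {seconds_until_wall(8.0, target):.1f} s")
```

Under these assumptions, a sustained 0.1 g corresponds to a tilt of roughly 5.7 degrees. At the slowest quoted rate of 0.5 degrees per second the platform would need about 11.5 seconds to get there, whereas real acceleration at that level uses up the eight-metre workspace in about four seconds. This suggests why the achievable tilt rate matters so much for the illusion.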

So you can cover much longer distances even though the workspace is only eight metres long?

It’s possible to simulate constant acceleration over longer distances. And if that takes place in virtual reality and you really see the motion because you’re driving on the road or flying through the air, you have the full impression of protracted acceleration. After all, your vestibular system says the same thing: “I'm accelerating.”

Is that also of interest to industry?

We’re doing basic research into perception thresholds and multisensory processing, but we’re also applying our findings to develop new perception-based motion simulations by improving the algorithms. Motion simulators are very widespread in industry: for training pilots and for testing vehicles. That’s also why we’re working together with the German automotive industry. We’re increasingly applying our knowledge of information processing in the human brain to technical uses.

In which studies do you now plan to use the cable robot?

Most navigation-related questions – for example, how we orient ourselves in unfamiliar surroundings or how we find a hotel or train station again in the city – relate to two-dimensional space. That’s the framework in which we usually operate while walking or driving. A classic task of navigation research is that you walk with your eyes closed in one direction. You then turn 30 degrees to the left and walk another 20 metres. Then you have to return to your starting point. We can now perform the whole thing with the cable robot in three dimensions, with additional control in the up-down direction. Until now, it was impossible to conduct such experiments with a simulator. I'm curious to see how well humans will perform.
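To give a sense of what a perfect navigator would have to compute in this task, here is a minimal sketch of the geometry, extended by a vertical component. It is an illustration only, not the experimental software: the function names are hypothetical, the length of the first leg is not given in the interview and is assumed to be 20 metres, and the 5-metre climb in the second example is likewise an assumption.

```python
import math


def integrate_path(legs):
    """Follow a path that starts at the origin facing along +x.
    Each leg is (turn_left_deg, horizontal_distance_m, climb_m).
    Returns the final (x, y, z) position and heading in radians."""
    x = y = z = 0.0
    heading = 0.0
    for turn_left_deg, distance_m, climb_m in legs:
        heading += math.radians(turn_left_deg)
        x += distance_m * math.cos(heading)
        y += distance_m * math.sin(heading)
        z += climb_m
    return (x, y, z), heading


def homing_response(legs):
    """Ideal response at the end of the path: straight-line distance home,
    horizontal turn in degrees (positive = left), and height change."""
    (x, y, z), heading = integrate_path(legs)
    distance_home = math.sqrt(x * x + y * y + z * z)
    bearing_home = math.atan2(-y, -x)
    turn = math.degrees(bearing_home - heading)
    turn = (turn + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return distance_home, turn, -z


# Flat version: assumed 20 m first leg, then 30 degrees left and 20 m.
flat = [(0.0, 20.0, 0.0), (30.0, 20.0, 0.0)]
# Three-dimensional version: same path, but the second leg also climbs 5 m.
climbing = [(0.0, 20.0, 0.0), (30.0, 20.0, 5.0)]

for name, legs in (("flat", flat), ("climbing", climbing)):
    dist, turn, dz = homing_response(legs)
    print(f"{name}: turn {turn:.0f} deg, travel {dist:.1f} m, height change {dz:.1f} m")
```

With the assumed 20-metre first leg, the ideal flat response is a 165-degree turn to the left followed by about 38.6 metres of travel. Comparing a subject's actual response with such an ideal value is one plausible way to quantify the error, and the vertical term shows what the third dimension adds to the task.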

The interview was conducted by Jens Eschert.